Reducing Text Input Lag to Improve Web Performance

This article was co-written by software engineers Ankit Ahuja and Taras Polovyi.

Many teams focus on shipping and iterating quickly to deliver the best user experience. However, that process often overlooks performance, which can cause the user experience to degrade gradually over time. Grammarly’s Browser Extensions team was no exception. In this post, we’ll explain our journey as we realized a performance problem existed, identified its root causes, fixed the worst bottlenecks, and, finally, made sure we won’t face issues like this in the future.

Over time, users told us that our product slowed down their browsers. The input latency these users noticed wasn’t common in most use cases. However, it increased when users worked with longer documents that contained many more suggestions, a common situation among our power users.

The regression was most noticeable as increased typing lag. This realization was our wake-up call: We had to prioritize improving the performance of our extension.

How we measured input latency (or, know your enemy)

At first, we only had anecdotal evidence of users experiencing significant input lag, and the users who tended to report it were on slower CPUs or were working on long documents with the Grammarly browser extension. Here, we define text input lag as the delay between the user pressing a key and the corresponding character appearing on the screen. We were particularly interested in understanding and reducing the Grammarly browser extension’s contribution to this lag.

Illustration of text input lag on a long document with multiple Grammarly suggestions

But anecdata wasn’t enough: To measure the effectiveness of our changes efficiently and plan our future work, we had to establish a baseline that would let us compare changes objectively. We decided to make the keyboard input latency overhead introduced by the Grammarly browser extension our main metric.

In the context of this work, we measured input lag in the text fields where Grammarly was actively assisting the user. To do its job, the extension has to keep track of every text change and update its suggestions in real time. However, this introduces the risk of running many computationally expensive operations in a way that degrades the user’s experience.

Lab-based benchmarking

Our eventual solution had to let us measure performance continuously, monitor the health of the production environment, and flag potentially harmful changes before they were merged into the main branch.

Because the subject of the measurement is not a specific web application but a browser extension, we couldn’t simply capture how long a keypress handler takes to execute. That number would also include the overhead introduced by the host page’s scripts, making it less precise.

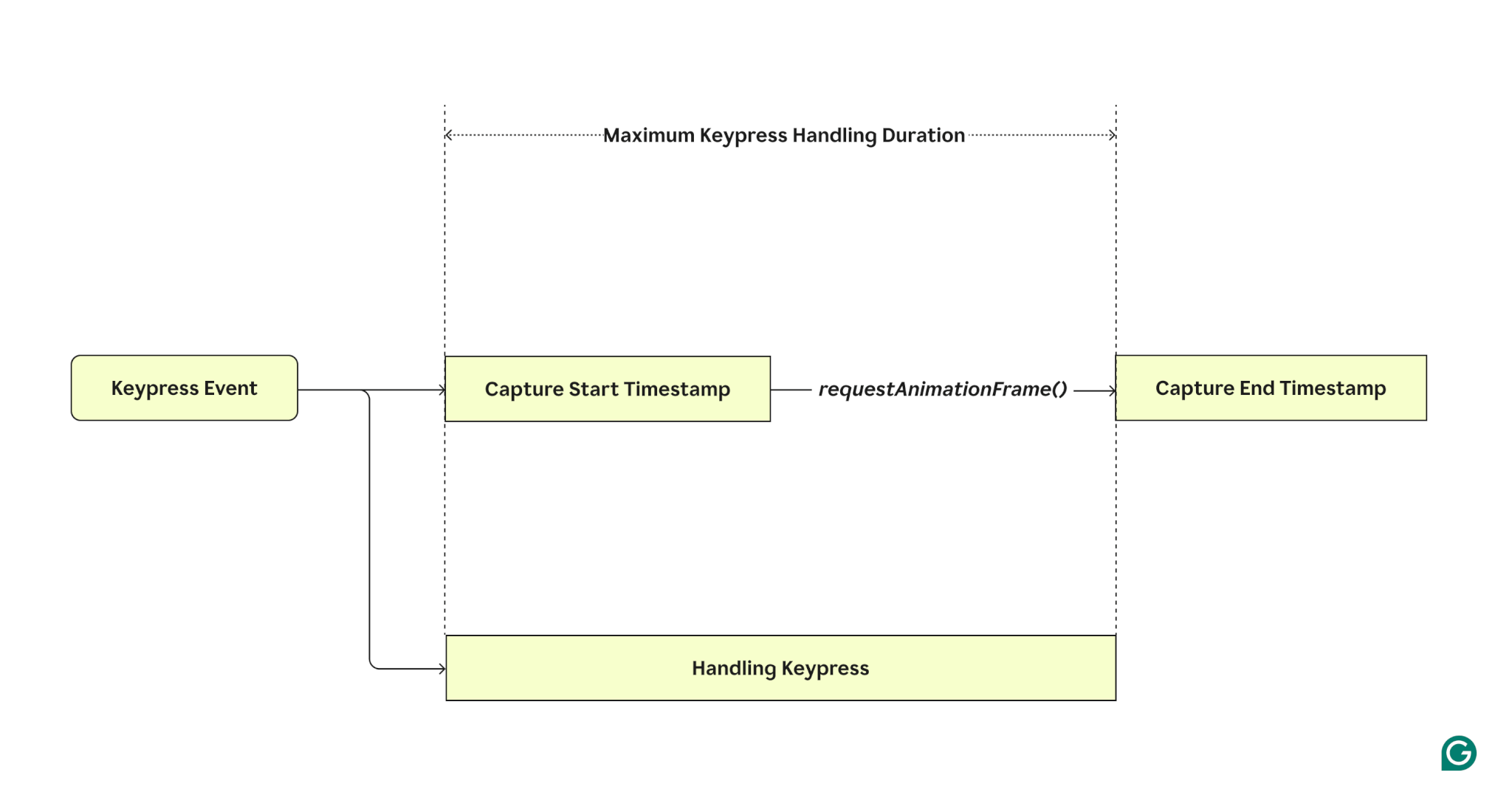

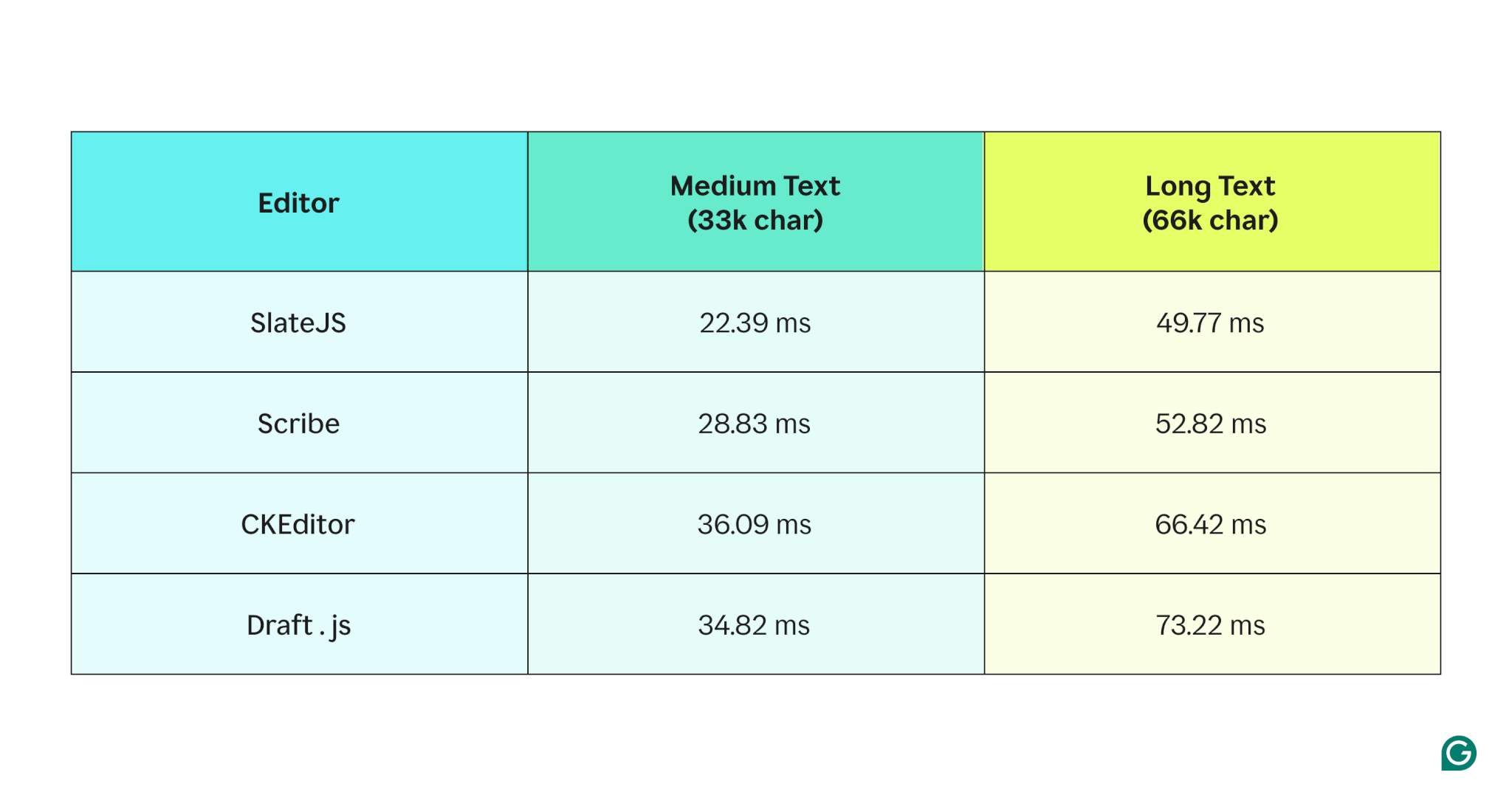

To solve this dilemma, we set up an isolated environment based on Playwright and Chrome performance profiling that could determine the “cost” of Grammarly by running a set of common user interactions on the same website with and without the Grammarly browser extension installed. After each new character is typed, the test uses window.requestAnimationFrame() to capture how long the main thread stays blocked.
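The page-side half of this measurement can be sketched in TypeScript. This is a minimal sketch under our own assumptions, not Grammarly’s test harness: `measureToNextFrame` is a hypothetical helper, and the clock and frame scheduler are passed in so the logic can also run outside a browser. Because `requestAnimationFrame()` callbacks run just before the browser renders its next frame, the interval from the keystroke to the callback approximates how long the main thread stayed busy, plus any wait for the next frame. Comparing against runs without the extension is what isolates the extension’s share.

```typescript
// Hypothetical helper (not Grammarly's code): time from a keystroke to the next frame.
type Clock = () => number;
type FrameScheduler = (callback: () => void) => void;

function measureToNextFrame(
  now: Clock,
  requestFrame: FrameScheduler,
  onMeasured: (latencyMs: number) => void,
): void {
  const start = now(); // call this from the keydown/input handler
  requestFrame(() => {
    // Runs just before the next render; any time spent blocking the main thread
    // after the keystroke delays this callback.
    onMeasured(now() - start);
  });
}

// Browser wiring (illustrative; `editor` and `report` are hypothetical):
// editor.addEventListener("keydown", () =>
//   measureToNextFrame(() => performance.now(), (cb) => requestAnimationFrame(cb), report));
```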

Once we had run each scenario enough times to obtain high-confidence data, we subtracted the mean latency of runs without the extension from the mean latency of runs with the extension installed. This gives us a number that represents the overhead introduced by the extension. The results were then visualized in Grafana and used to monitor the performance of new features.
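The overhead is a plain difference of means: overhead = mean(latency with extension) - mean(latency without extension). A minimal sketch of that arithmetic, with hypothetical function names and made-up sample values used purely for illustration:

```typescript
// Overhead = mean latency with the extension - mean latency without it.
function mean(samples: readonly number[]): number {
  if (samples.length === 0) {
    throw new Error("mean() needs at least one sample");
  }
  return samples.reduce((sum, x) => sum + x, 0) / samples.length;
}

function extensionOverheadMs(
  withExtensionMs: readonly number[],
  withoutExtensionMs: readonly number[],
): number {
  return mean(withExtensionMs) - mean(withoutExtensionMs);
}

// Illustrative numbers only: 14 ms vs. 6 ms on average gives 8 ms of overhead.
const overhead = extensionOverheadMs([12, 14, 16], [4, 6, 8]);
```

Using the difference of means keeps costs shared by both configurations (the host page’s own scripts, waiting for the next frame) out of the reported number.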

Initial local benchmarking results showing the text input lag added by Grammarly

This solution worked well for giving us a specific number, but it was isolated from the real world. Even though we saw improvements in the lab environment, we couldn’t treat those results as representative of the impact on actual users. We had to set up performance observability in production.

Measuring input latency in the field

Just as in the performance tests, we measured individual keypress performance as the time from the event firing to the next render. But in this case, we were trying to collect data at production scale, and that comes with its own challenges.

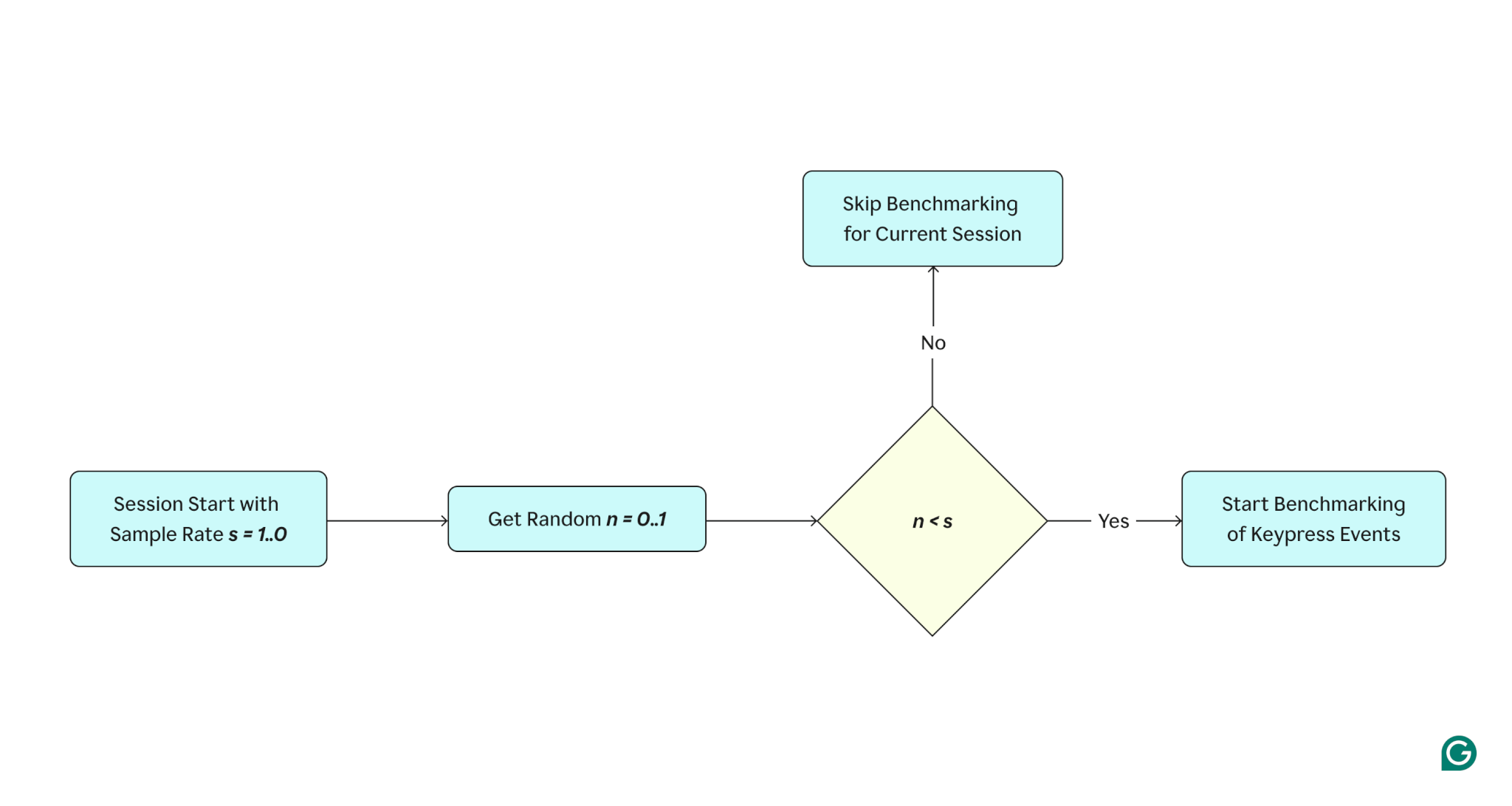

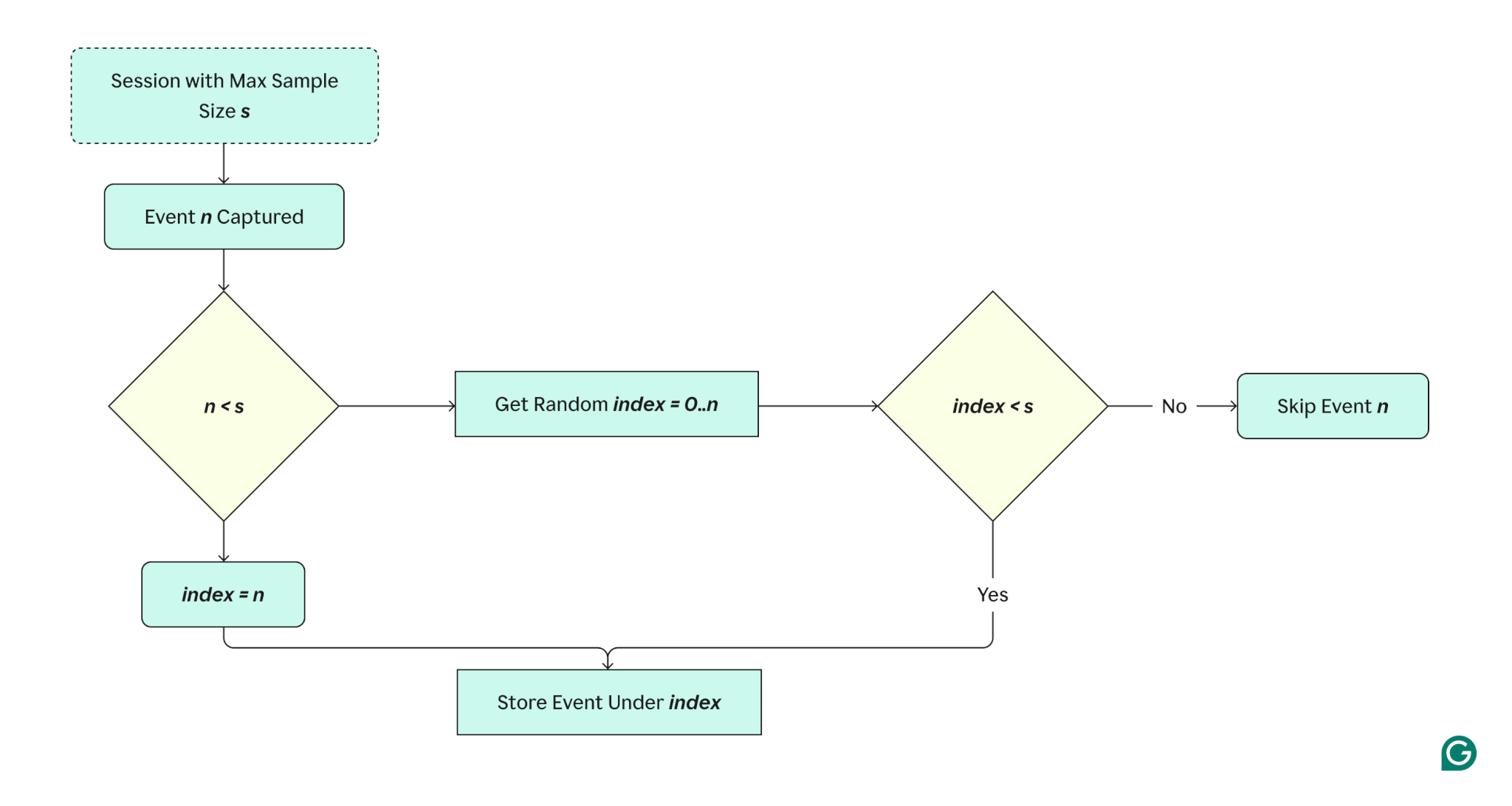

If we captured the timing of every single keypress, we would put a heavy load on our data infrastructure, yet we would likely gain little from such a large volume of data. As a result, we decided it was best to sample events so that they would stay representative while we processed and stored only the data we actually needed.

Session-level sampling is the simplest approach. At the start of each editing session, we “roll the dice” to decide at random whether to take performance measurements. The probability is configurable and ranges from 1% to 10%, because different websites generate different amounts of traffic, so it takes a different amount of time to collect a high-confidence dataset on each of them.
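Session-level sampling comes down to one random draw per session. The sketch below is illustrative only: the hostnames, the per-site rate table, and the 10% default are assumptions (the article says only that rates range from 1% to 10%), and `random` is injectable for testing.

```typescript
// Hypothetical per-site sampling rates; busier sites need a lower rate.
const SAMPLE_RATES: Record<string, number> = {
  "high-traffic.example.com": 0.01,
};
const DEFAULT_SAMPLE_RATE = 0.1;

function sampleRateFor(hostname: string): number {
  return SAMPLE_RATES[hostname] ?? DEFAULT_SAMPLE_RATE;
}

/** Roll the dice once, at the start of an editing session. */
function shouldSampleSession(sampleRate: number, random: () => number = Math.random): boolean {
  if (sampleRate < 0 || sampleRate > 1) {
    throw new RangeError("sampleRate must be between 0 and 1");
  }
  // random() is uniform in [0, 1), so this is true with probability sampleRate.
  return random() < sampleRate;
}
```

Deciding once per session, rather than per keypress, keeps the decision cheap and means every keypress in a sampled session is eligible for measurement.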

But there was another problem: Users type different amounts of text during their sessions. For instance, one user might have a slow setup while writing a lot of text (such as a science paper), while 10 users from the sample with faster systems might write only small amounts of text. In these cases, the data from a single power user can outweigh everyone else’s, skew the dataset, and make the benchmark results look worse.

To solve this problem, we limited the number of events recorded from each session to 10. This meant we also had to account for the fact that conditions can vary within a single session. For example, the text might grow significantly over the course of an editing session, so if we captured only the first n events, the data would be biased: it would miss the performance issues that appear in the later stages of editing.

There are quite a few ways to address this kind of problem. We chose Reservoir Sampling (specifically, Algorithm R). Reservoir Sampling gives every event in a stream an equal chance of being selected without knowing in advance how many events the stream will contain.
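Algorithm R keeps the first k events, then gives the i-th event (1-based) a k/i chance of replacing a uniformly chosen slot, which leaves every event seen so far in the reservoir with equal probability k/i. A minimal TypeScript sketch with k = 10, as in the article; the class name, method names, and injectable random source are our own, not Grammarly’s code.

```typescript
// Algorithm R reservoir sampling (a sketch; class and parameter names are our own).
class Reservoir<T> {
  private readonly items: T[] = [];
  private seen = 0;
  private readonly capacity: number;
  private readonly random: () => number;

  constructor(capacity: number, random: () => number = Math.random) {
    if (!Number.isInteger(capacity) || capacity <= 0) {
      throw new RangeError("capacity must be a positive integer");
    }
    this.capacity = capacity;
    this.random = random;
  }

  add(item: T): void {
    this.seen += 1;
    if (this.items.length < this.capacity) {
      // Fill the reservoir with the first `capacity` events.
      this.items.push(item);
      return;
    }
    // Pick j uniformly from 0..seen-1. The new event is kept with probability
    // capacity/seen, replacing the uniformly chosen slot j.
    const j = Math.floor(this.random() * this.seen);
    if (j < this.capacity) {
      this.items[j] = item;
    }
  }

  /** The sampled events, at most `capacity` of them. */
  sample(): readonly T[] {
    return this.items;
  }

  /** Total number of events offered so far. */
  get total(): number {
    return this.seen;
  }
}
```

In a sampled session, each measured keypress latency could be passed to a `new Reservoir<number>(10)`, with only `sample()` reported when the session ends, so long sessions contribute the same number of events as short ones.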

Combining “lab-based” performance tests with “real-world” performance observability lets us be more confident in the changes we make to our codebase every day.

Analyzing areas of enchancment

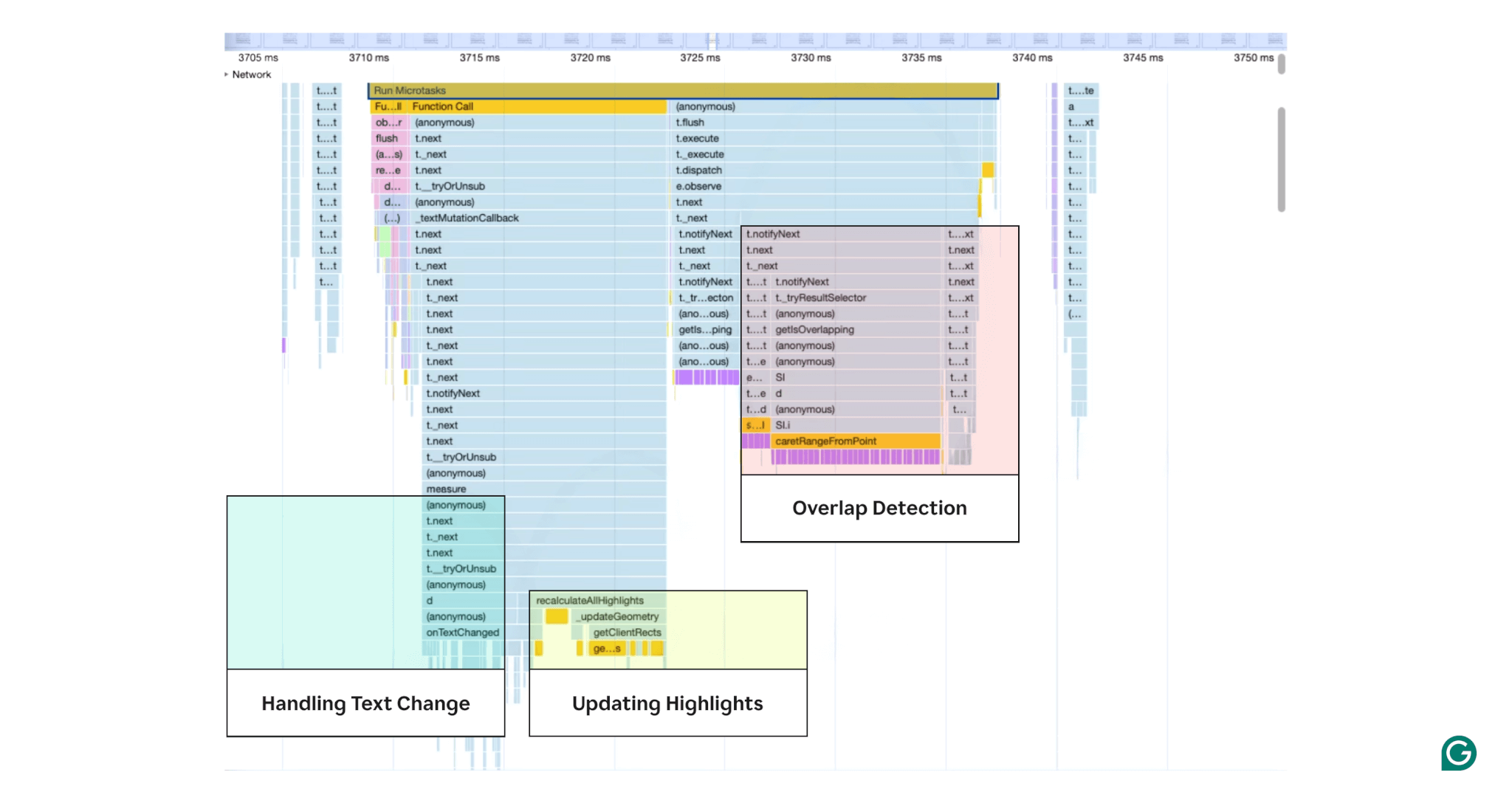

Our subsequent step was to dig into the Chrome efficiency profile with Grammarly put in to establish areas for enchancment.

We quickly realized that we had been working most of the costliest computations on every keypress. Despite the fact that we recognized different alternatives to cut back work on the principle thread, we tagged them as decrease priorities. Our preliminary focus was to establish work accomplished on every keypress and the way we may decrease it.

Instance Chrome profile illustrating heavy areas of computation on keypress

The Chrome profile above illustrates a number of the computations accomplished on every keypress. On this evaluation section, our native benchmarking instruments helped us estimate the impression of those completely different areas. This allowed us to create a construct that eliminated one space of labor at a time, after which to measure the ensuing textual content enter lag.

- Overlap detection with text: On every text change, we computed whether the Grammarly button overlapped the user’s text. If it did, we collapsed the Grammarly button.

- Handling text changes: On every text change, we updated the Grammarly suggestions to eliminate redundant ones and recalculated the text coordinates of the suggestions.

- Updating highlights: As the Grammarly suggestions changed, we visually updated how their underlines were displayed.

With this analysis complete, we were able to start implementing our solutions.

Implementation

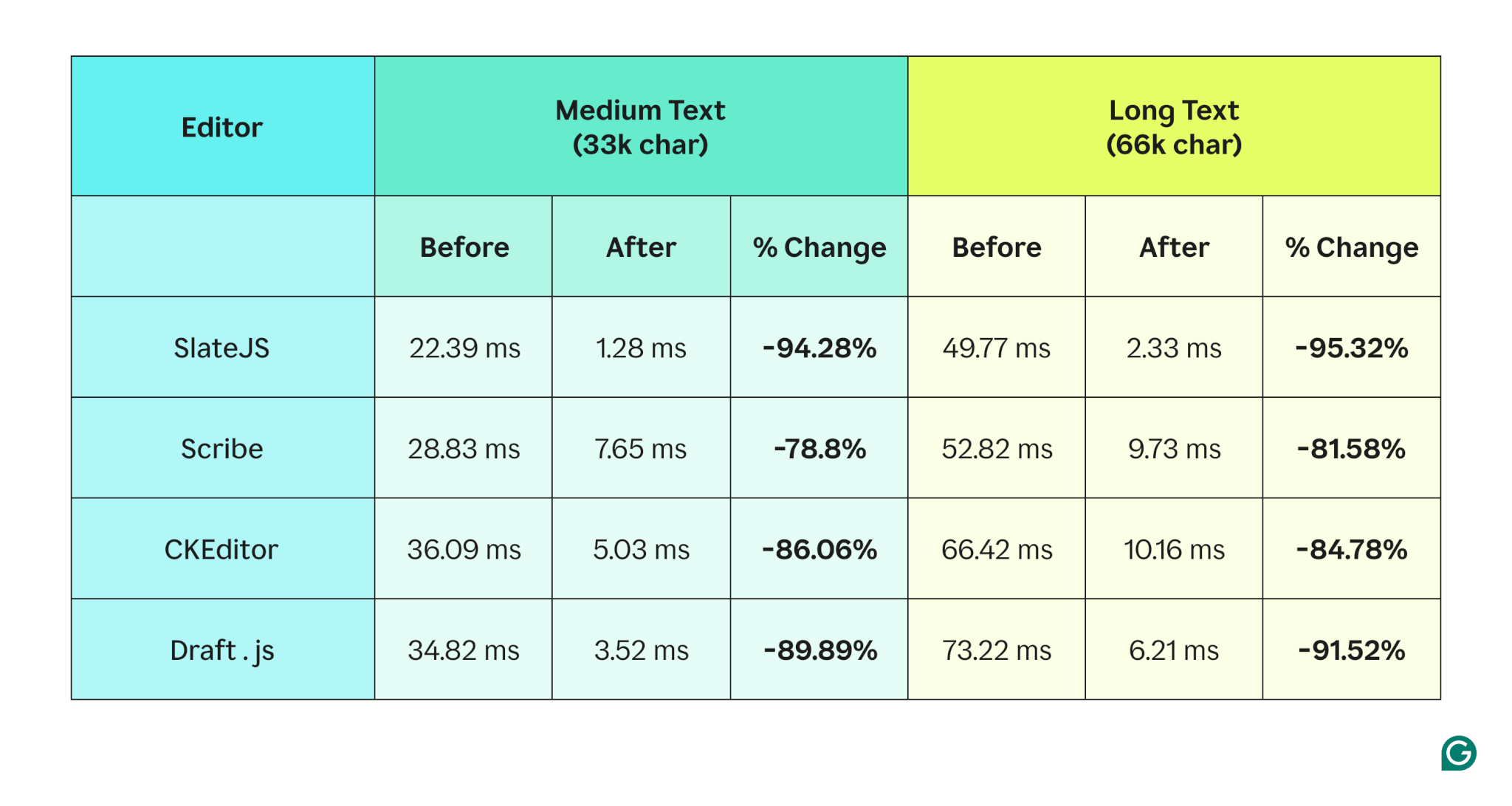

Utilizing our lab and discipline analysis outcomes, we developed three options: a brand new UX for the Grammarly button, optimized underline updates, and asynchronous processing for ideas.

Altering UX to enhance efficiency

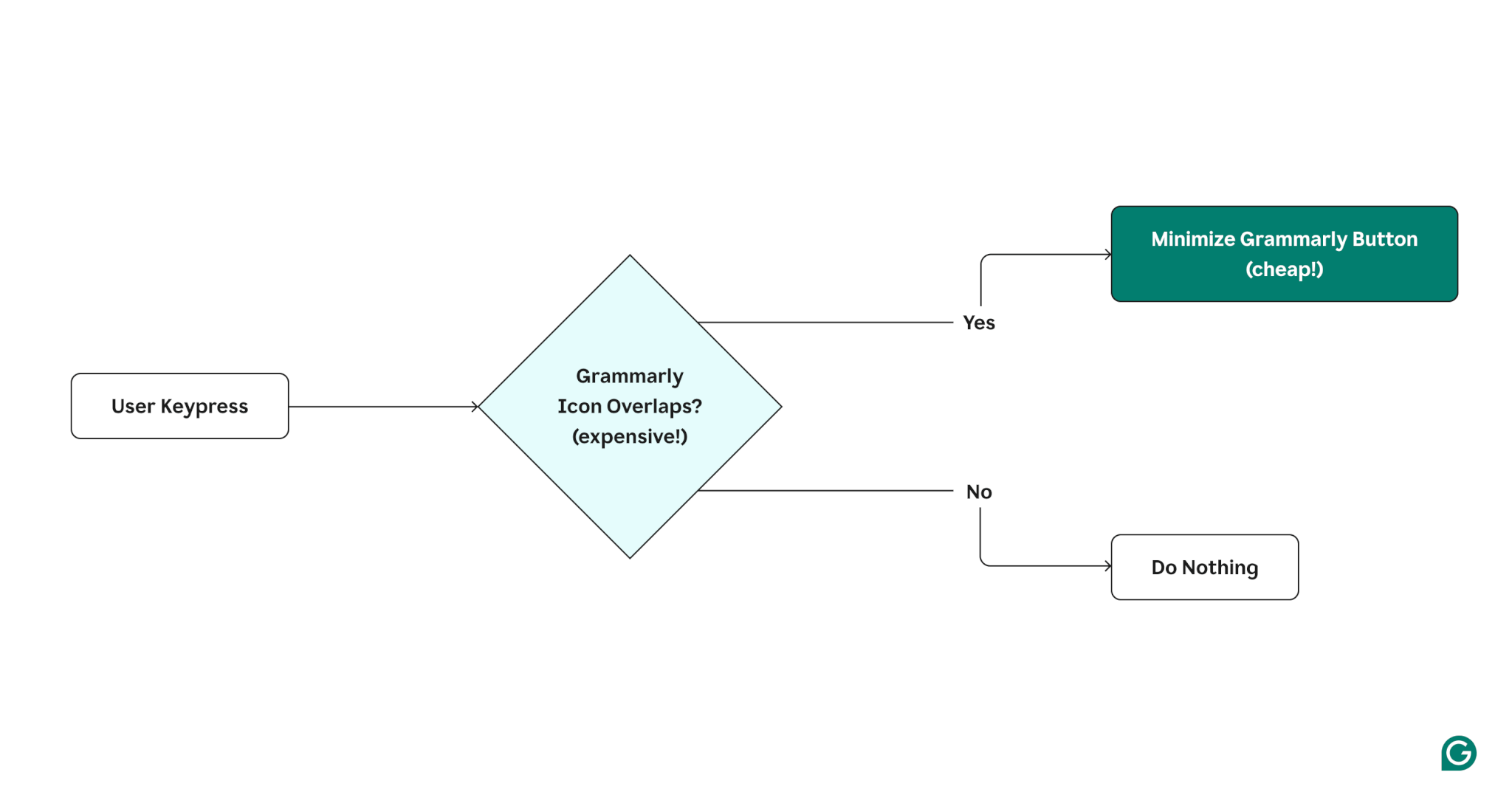

On every textual content change, we computed whether or not the Grammarly button overlaps with the person’s textual content. This was significantly costly on account of calls to the caretRangeFromPoint DOM API.

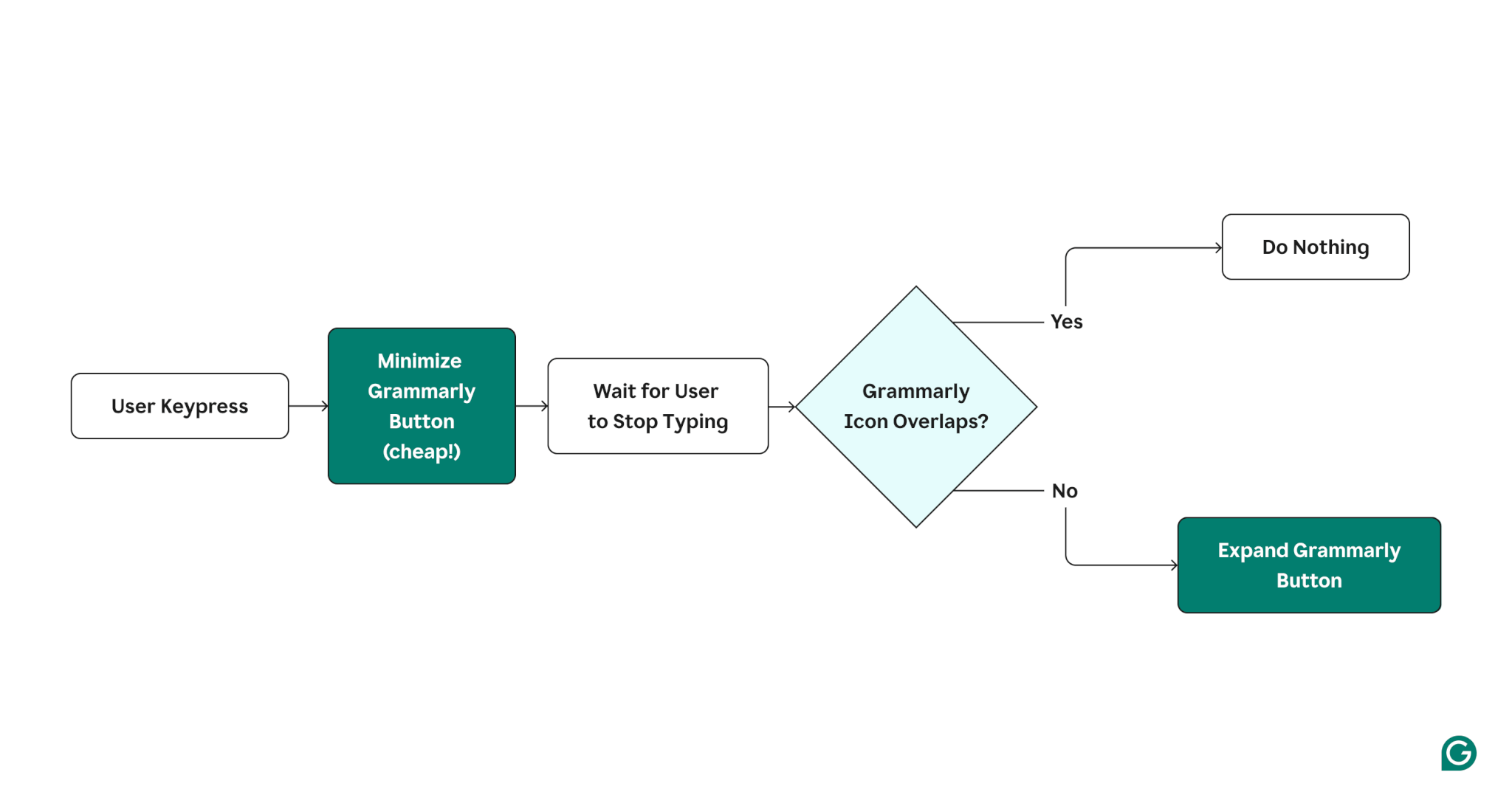

To reduce this cost, we decided to experiment with a new UX that delayed the overlap check until the user stopped typing. The visuals below show a diagram of the old UX, a diagram of the new UX, and an illustration of the new UX in action.

Old UX (with an expensive overlap check on every keypress)

New UX (delaying the overlap check until the user stops typing)

Illustration of the new, more performant Grammarly minification UX
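One common way to implement “wait until the user stops typing” is a debounce. The sketch below assumes that technique; the article does not say how Grammarly detects a pause. `collapseButton`, `checkOverlap`, the idle delay, and the injected timer are all hypothetical names so the logic can be tested without a browser.

```typescript
// Sketch: collapse the button cheaply on every keystroke, and run the expensive
// overlap check once typing pauses. All names and the delay are hypothetical.
type Cancel = () => void;
type Timer = (callback: () => void, delayMs: number) => Cancel;

function debounce(action: () => void, delayMs: number, setTimer: Timer): () => void {
  let cancelPending: Cancel | null = null;
  return () => {
    cancelPending?.(); // a new keystroke restarts the countdown
    cancelPending = setTimer(() => {
      cancelPending = null;
      action();
    }, delayMs);
  };
}

function createTextChangeHandler(
  collapseButton: () => void, // cheap: no layout queries
  checkOverlap: () => void, // expensive: e.g. caretRangeFromPoint() calls
  idleDelayMs: number,
  setTimer: Timer,
): () => void {
  const scheduleOverlapCheck = debounce(checkOverlap, idleDelayMs, setTimer);
  return () => {
    collapseButton();
    scheduleOverlapCheck();
  };
}

// Browser timer adapter (illustrative):
// const browserTimer: Timer = (cb, ms) => { const id = setTimeout(cb, ms); return () => clearTimeout(id); };
```

With this shape, a burst of keystrokes costs one cheap collapse per keystroke and a single overlap check after the burst ends.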

We hypothesized that users might prefer this new UX, since the Grammarly button would collapse by default while they were typing, making it less distracting.

Beyond the performance improvements, we found that users did prefer this experience because it reduced the number of cases where Grammarly got in the way of their writing. As a result, the number of users disabling the extension decreased by 9%.

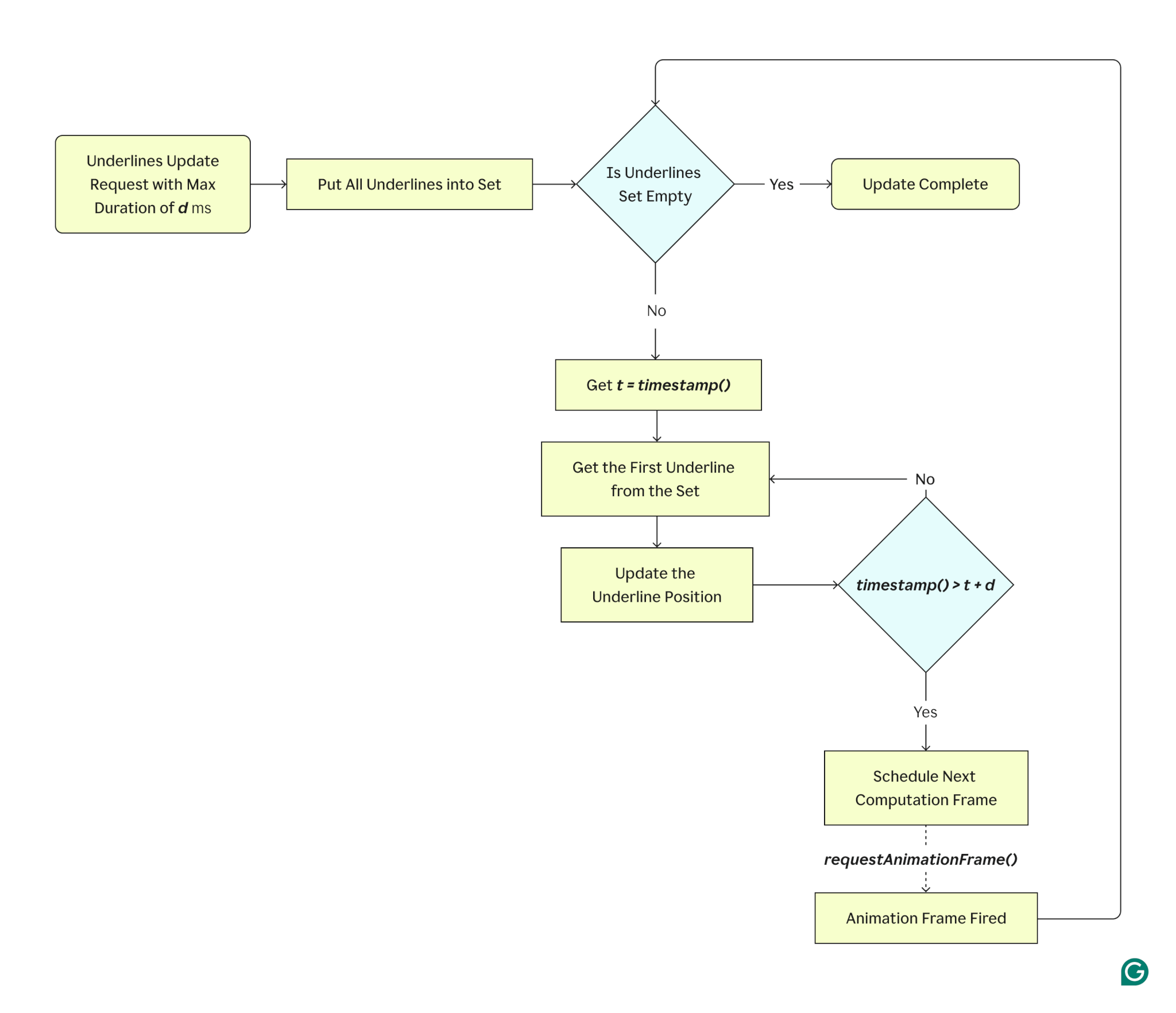

Optimizing underline updates (or, how to eat an elephant)

As we mentioned earlier, updating the positions of the underlines (what we call “highlights” internally) was one of the most significant contributors to overhead on keypress events.

In general, Grammarly doesn’t modify the actual HTML that represents the text. To render underlines in the right place, we have to build our own text representation, which tells us where each character of the text appears on the screen based on the editor’s computed styles.

The challenges begin when a user edits the text. Once that happens, we need to update the geometry of every affected underline to match the new on-screen positions of the words. Detecting the position of each underline requires a relatively expensive DOM API. This becomes a problem for long documents, where the number of underlines can reach the hundreds.

Our team discussed several ideas for addressing this.

At first, we considered deferring the work until the user stopped typing. We decided this wasn’t suitable because the underlines would sit in the wrong places for a noticeable amount of time.

Next, we tried moving the process into a separate thread (i.e., a Web Worker), but workers don’t have access to the DOM, which means the most expensive part of the update would have stayed on the main thread.

We were left with one option: find a way to perform this long task on the main thread as early as possible without interfering with user input.

After some prototyping, we came up with a solution that met our requirements. We allocated a fixed time budget for processing as many underlines as possible without introducing noticeable lag. If the time runs out before every underline is updated, the next chunk is scheduled with the window.requestAnimationFrame() API. The process continues until no unprocessed items remain.

Meanwhile, the user might still be typing, which means a new geometry update may be needed before the previous task has finished. To handle this, the underlines scheduled for an update are stored in a Set, which ensures that an underline queued more than once is still processed only once.
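A minimal sketch of this time-budgeted loop, assuming hypothetical names (`ChunkedHighlightUpdater`, `updateGeometry`) and a budget value the article doesn’t specify. The clock and frame scheduler are injected; in a browser they would be `() => performance.now()` and `(cb) => requestAnimationFrame(cb)`. The first chunk runs synchronously inside `schedule()`, leftovers roll over to the next animation frame, and the pending Set collapses duplicate requests.

```typescript
// Sketch of time-budgeted highlight updates; names and budget are hypothetical.
type NowFn = () => number;
type RequestFrame = (callback: () => void) => void;

class ChunkedHighlightUpdater<Id> {
  // A Set means a highlight queued twice is still repositioned only once.
  private readonly pending = new Set<Id>();
  private frameScheduled = false;
  private readonly updateGeometry: (id: Id) => void;
  private readonly budgetMs: number;
  private readonly now: NowFn;
  private readonly requestFrame: RequestFrame;

  constructor(updateGeometry: (id: Id) => void, budgetMs: number, now: NowFn, requestFrame: RequestFrame) {
    this.updateGeometry = updateGeometry;
    this.budgetMs = budgetMs;
    this.now = now;
    this.requestFrame = requestFrame;
  }

  /** Queue highlights after a text change; the first chunk runs synchronously. */
  schedule(ids: Iterable<Id>): void {
    for (const id of ids) {
      this.pending.add(id);
    }
    this.runChunk();
  }

  private runChunk(): void {
    const deadline = this.now() + this.budgetMs;
    for (const id of this.pending) {
      // Deleting the current entry while iterating a Set is well-defined in JavaScript.
      this.pending.delete(id);
      this.updateGeometry(id); // the expensive DOM measurement in a real extension
      if (this.now() >= deadline) {
        break; // budget spent: yield so the browser can handle input and paint
      }
    }
    if (this.pending.size > 0 && !this.frameScheduled) {
      this.frameScheduled = true;
      this.requestFrame(() => {
        this.frameScheduled = false;
        this.runChunk();
      });
    }
  }
}
```

At least one highlight is processed per chunk, so the loop always makes progress even if a single update exceeds the budget.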

Another benefit of this approach is that it doesn’t change the user experience in the vast majority of scenarios. Because the first processing window runs synchronously, on most setups the underlines are repositioned the moment a text change is detected.

In less common cases with large documents, the complete update might take several animation frames. This does leave room for some inconsistencies, but during our testing, they were barely noticeable, even on extremely long documents.

This optimization helped us improve input latency by up to 50%, depending on the type of editor the Grammarly browser extension was integrated into.

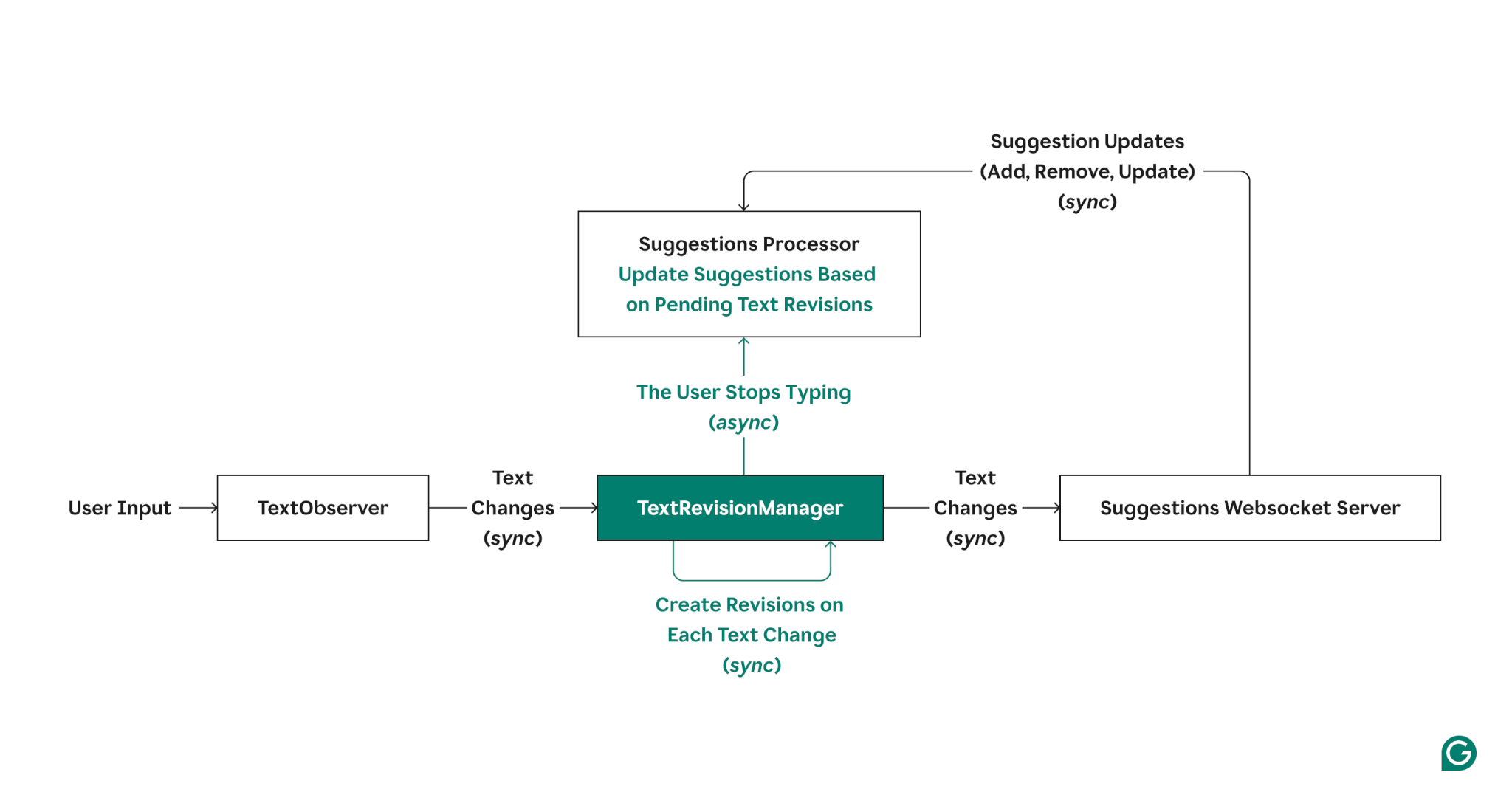

Asynchronous processing of suggestions

Updating suggestions on text input was the most significant contributor to input lag, because it also triggered highlight and DOM updates.

Instead of updating suggestions on every text change, we wanted to wait for the user to stop typing and then update them. Users don’t interact with suggestions until they’re done typing (except in cases like autocorrect), so we expected this approach to work.

We needed to redesign suggestion updates to be asynchronous. We developed an incremental solution that let us apply asynchronous updates once the user stopped typing while still supporting other, synchronous processes.

Simplified architecture diagram showing the new abstractions and concepts added to suggestion updates (highlighted in green)

Thanks to this redesigned architecture, we were able to change how suggestions work in a few key ways, including:

- The extension now buffers text revisions and visually updates the highlights by one character as the user types.

- Once the user stops typing, the extension processes any pending text revisions.

- The extension also processes pending text revisions whenever a synchronous API is called (until all APIs can be made asynchronous).
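The buffering behavior described in these bullets can be sketched as a small class. Everything here is an assumption for illustration: `TextRevision`’s fields, `RevisionBuffer`, the idle delay, and the injected timer are hypothetical, and the per-keystroke highlight adjustment is left out. `push()` does only cheap bookkeeping, the idle timer flushes once typing pauses, and a synchronous API can call `flush()` directly to force pending revisions through.

```typescript
// Sketch of buffering text revisions. Names, fields and the idle delay are hypothetical.
interface TextRevision {
  offset: number; // where the edit starts
  deletedLength: number; // characters removed at `offset`
  inserted: string; // characters inserted at `offset`
}

type CancelFn = () => void;
type IdleTimer = (callback: () => void, delayMs: number) => CancelFn;

class RevisionBuffer {
  private pending: TextRevision[] = [];
  private cancelIdle: CancelFn | null = null;
  private readonly processRevisions: (batch: readonly TextRevision[]) => void;
  private readonly idleDelayMs: number;
  private readonly setTimer: IdleTimer;

  constructor(
    processRevisions: (batch: readonly TextRevision[]) => void,
    idleDelayMs: number,
    setTimer: IdleTimer,
  ) {
    this.processRevisions = processRevisions;
    this.idleDelayMs = idleDelayMs;
    this.setTimer = setTimer;
  }

  /** Called on every keystroke: only cheap bookkeeping happens here. */
  push(revision: TextRevision): void {
    this.pending.push(revision);
    this.cancelIdle?.(); // still typing: postpone processing
    this.cancelIdle = this.setTimer(() => this.flush(), this.idleDelayMs);
  }

  /**
   * Processes everything buffered so far. Runs when typing pauses, and can be
   * called directly by synchronous APIs that need up-to-date suggestions.
   */
  flush(): void {
    this.cancelIdle?.();
    this.cancelIdle = null;
    if (this.pending.length === 0) {
      return;
    }
    const batch = this.pending;
    this.pending = [];
    this.processRevisions(batch); // the expensive suggestion update, once per batch
  }
}
```

Processing a batch once per pause, instead of once per keystroke, is what moves the expensive suggestion update off the typing path.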

Results and lessons learned

We achieved significant improvements in both objective performance and subjective user experience.

- Overall, this work reduced text input lag by ~91%.

- Optimizing underline updates improved input latency by up to 50%, depending on the type of editor the Grammarly browser extension was integrated into.

- Users preferred the new UX we originally built for performance reasons, so this work also reduced the number of users disabling the extension by 9%.

- We received positive feedback from Grammarly users who had previously been experiencing input lag.

- We set up robust infrastructure to track the Grammarly browser extension’s performance over the long term, both in the lab and in the field.

Now, we’re able to prevent regressions as we ship new user experiences.

We also learned some important lessons along the way, including:

- Look for quick wins when tackling hard problems. Improving how the Grammarly button is minimized gave us an early win and a platform for working on the more complex problems.

- Exploring alternative user experiences allowed us to defer code execution until users weren’t actively interacting with the webpage.

- When possible, design your web application so it can run work asynchronously instead of relying on synchronous events. This allowed us to split up long tasks and avoid blocking the main thread.

- Using both lab-based and field-based performance metrics is essential. Lab-based metrics helped us track progress as we worked on improvements and prevent regressions as changes were merged over time. Field-based metrics reflected users’ real experiences across their different environments and helped us prioritize performance work.

Performance is often deprioritized while new roadmap features are being built, either because we assume users won’t notice relatively small improvements or because we assume only the rare power user hits performance limits. But, as we discovered, performance is a feature, and sufficiently large improvements benefit everyone.

What’s next?

We’re still working on improving the performance of the Grammarly browser extension, including its memory and CPU usage. For example, we’re now measuring INP (Interaction to Next Paint) to track input lag more holistically in the field. We’re also working on breaking the long task of updating suggestions into smaller tasks and exploring moving work to a Web Worker.

Improving performance is a project that’s never done, and it’s another example of how we work to give our users the best experience we can. If that goal resonates with you, check out our open roles and consider joining Grammarly today.