What Is Tokenization in NLP? A Beginner’s Guide

Tokenization is a critical yet often overlooked component of natural language processing (NLP). In this guide, we’ll explain tokenization, its use cases, pros and cons, and why it’s involved in almost every large language model (LLM).

What is tokenization in NLP?

Tokenization is an NLP method that converts text into numerical formats that machine learning (ML) models can use. When you send your prompt to an LLM such as Anthropic’s Claude, Google’s Gemini, or a member of OpenAI’s GPT series, the model does not directly read your text. These models can only take numbers as inputs, so the text must first be converted into a sequence of numbers using a tokenizer.

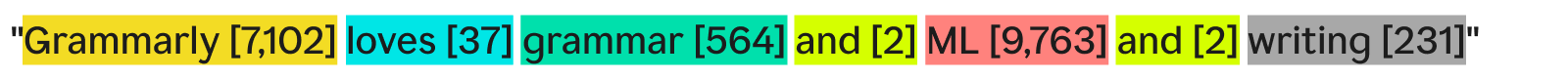

One way a tokenizer could tokenize text is to split it into separate words and assign a number to each unique word:

“Grammarly loves grammar and ML and writing” might become:

Each word (and its associated number) is a token. An ML model can use the sequence of tokens ([7,102], [37], [564], [2], [9,763], [2], [231]) to run its operations and produce its output. This output is usually a number, which is converted back into text using the reverse of this same tokenization process. In practice, this word-by-word tokenization is great as an example but is rarely used in industry for reasons we’ll see later.

One final thing to note is that tokenizers have vocabularies: the complete set of tokens they can handle. A tokenizer that knows basic English words but not company names may not have “Grammarly” as a token in its vocabulary, leading to tokenization failure.
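Here is a minimal sketch of that word-by-word scheme. The vocabulary is hypothetical: the IDs are taken from the sequence quoted above, reading 7,102 and 9,763 as the single numbers 7102 and 9763, and the function name `word_tokenize` is our own.

```python
# Hypothetical vocabulary; IDs follow the sequence quoted in the text.
VOCAB = {
    "Grammarly": 7102,
    "loves": 37,
    "grammar": 564,
    "and": 2,
    "ML": 9763,
    "writing": 231,
}


def word_tokenize(text):
    """Split on whitespace and look up the ID of each word."""
    ids = []
    for word in text.split():
        if word not in VOCAB:
            # An out-of-vocabulary word is the tokenization failure case.
            raise KeyError(f"word not in vocabulary: {word!r}")
        ids.append(VOCAB[word])
    return ids


print(word_tokenize("Grammarly loves grammar and ML and writing"))
# [7102, 37, 564, 2, 9763, 2, 231]
```

Passing a sentence with a word outside `VOCAB` raises a `KeyError`, which is the failure mode described above.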

Types of tokenization

In general, tokenization is turning a chunk of text into a sequence of numbers. Though it’s natural to think of tokenization at the word level, there are many other tokenization methods, one of which, subword tokenization, is the industry standard.

Word tokenization

Word tokenization is the example we saw before, where text is split by each word and by punctuation.

Word tokenization’s main benefit is that it’s easy to understand and visualize. However, it has a few shortcomings:

- Punctuation, if present, is attached to the words, as with “writing.”

- Novel or uncommon words (such as “Grammarly”) take up a whole token.

As a result, word tokenization can create vocabularies with hundreds of thousands of tokens. The problem with large vocabularies is that they make training and inference much less efficient: the matrix needed to convert between text and numbers would need to be huge.

Additionally, there would be many infrequently used words, and the NLP models wouldn’t have enough relevant training data to return accurate responses for these infrequent words. If a new word were invented tomorrow, an LLM using word tokenization would need to be retrained to incorporate this word.
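To make the size of that matrix concrete, here is a rough calculation. It assumes the matrix holds one row per token and one column per embedding dimension. The vocabulary sizes and the embedding width of 1,024 are hypothetical numbers chosen for illustration.

```python
def embedding_parameters(vocab_size, embedding_dim):
    """Number of values in a lookup matrix with one row per token."""
    return vocab_size * embedding_dim


EMBEDDING_DIM = 1024  # hypothetical embedding width

for name, vocab_size in [("subword", 50_000), ("word", 500_000)]:
    count = embedding_parameters(vocab_size, EMBEDDING_DIM)
    print(f"{name}: {count:,} values")
# subword: 51,200,000 values
# word: 512,000,000 values
```

Under these assumptions, a tenfold larger vocabulary means a tenfold larger matrix.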

Subword tokenization

Subword tokenization splits text into chunks smaller than or equal to words. There is no fixed size for each token; each token (and its length) is determined by the training process. Subword tokenization is the industry standard for LLMs. Below is an example, with tokenization done by the GPT-4o tokenizer:

Here, the uncommon word “Grammarly” gets broken down into three tokens: “Gr,” “amm,” and “arly.” Meanwhile, the other words are common enough in text that they form their own tokens.

Subword tokenization allows for smaller vocabularies, meaning more efficient and cheaper training and inference. Subword tokenizers can also break down rare or novel words into combinations of smaller, existing tokens. For these reasons, many NLP models use subword tokenization.
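As a rough illustration of how a rare word can be assembled from smaller pieces, the sketch below uses greedy longest-match splitting over a small, hand-written vocabulary. This is a simplification chosen for readability, not the algorithm the GPT-4o tokenizer uses, and both `VOCAB` and `split_word` are hypothetical.

```python
# Hypothetical subword vocabulary; a real one is learned from data.
VOCAB = {"Gr", "amm", "arly", "loves", "grammar", "and", "ML", "writing"}


def split_word(word, vocab):
    """Greedily take the longest vocabulary entry at each position."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest candidate first, then shrink it.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # Nothing matched: fall back to a single character.
            pieces.append(word[start])
            start += 1
    return pieces


print(split_word("Grammarly", VOCAB))  # ['Gr', 'amm', 'arly']
print(split_word("grammar", VOCAB))    # ['grammar']
```

The single-character fallback is what lets this sketch handle words it has never seen.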

Character tokenization

Character tokenization splits text into individual characters. Here’s how our example would look:

Every single unique character becomes its own token. This actually requires the smallest vocabulary since there are only 52 letters in the alphabet (uppercase and lowercase are regarded as different) and several punctuation marks. Since any English word must be formed from these characters, character tokenization can work with any new or rare word.

However, by standard LLM benchmarks, character tokenization doesn’t perform as well as subword tokenization in practice. The subword token “car” contains much more information than the character token “c,” so the attention mechanism in transformers has more information to run on.
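A short sketch of character tokenization on our example sentence shows the trade: the vocabulary is tiny, but the token sequence is long. The function name `char_tokenize` and the choice to number characters in sorted order are our own assumptions.

```python
def char_tokenize(text):
    """Return the character tokens, the vocabulary, and the token IDs."""
    tokens = list(text)
    vocab = sorted(set(tokens))  # one entry per unique character
    ids = [vocab.index(ch) for ch in tokens]
    return tokens, vocab, ids


tokens, vocab, ids = char_tokenize("Grammarly loves grammar and ML and writing")
print(len(tokens))  # 42 tokens, counting spaces
print(len(vocab))   # 19 unique characters
```

The same sentence is only seven tokens at the word level.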

Sentence tokenization

Sentence tokenization turns each sentence in the text into its own token. Our example would look like:

The benefit is that each token contains a ton of information. However, there are several drawbacks. There are infinite ways to combine words to write sentences. So, the vocabulary would need to be infinite as well.

Additionally, each sentence itself would be pretty rare since even minute differences (such as “as well” instead of “and”) would mean a different token despite having the same meaning. Training and inference would be a nightmare. Sentence tokenization is used in specialized use cases such as sentence sentiment analysis, but otherwise, it’s a rare sight.
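The sketch below shows the problem on two sentences that mean the same thing. It uses a naive split on end-of-sentence punctuation, which is an assumption made for brevity. The second sentence is a made-up variation on our example.

```python
import re


def sentence_tokenize(text):
    """Naively split after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


text = "Grammarly loves grammar and ML. Grammarly loves grammar as well as ML."
sentences = sentence_tokenize(text)

# Each distinct sentence needs its own vocabulary entry.
vocab = {sentence: token_id for token_id, sentence in enumerate(sentences)}
print(vocab)
# {'Grammarly loves grammar and ML.': 0, 'Grammarly loves grammar as well as ML.': 1}
```

Two near-identical sentences already take two vocabulary entries, and nothing learned about one transfers to the other.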

Tokenization tradeoff: efficiency vs. performance

Choosing the right granularity of tokenization for a model means balancing efficiency against performance. With very large tokens (e.g., at the sentence level), the vocabulary becomes massive. The model’s training efficiency drops because the matrix to hold all these tokens is huge. Performance plummets since there isn’t enough training data for the model to meaningfully learn relationships among all the unique tokens.

On the other end, with small tokens, the vocabulary becomes small. Training becomes efficient, but performance may plummet since each token doesn’t contain enough information for the model to learn token-token relationships.

Subword tokenization is right in the middle. Each token has enough information for models to learn relationships, but the vocabulary isn’t so large that training becomes inefficient.

How tokenization works

Subword tokenizers must be trained to split text effectively.

Why is it that “Grammarly” gets split into “Gr,” “amm,” and “arly”? Couldn’t “Gram,” “mar,” and “ly” also work? To a human eye, it definitely could, but the tokenizer, which has presumably learned the most efficient representation, thinks differently. A common training algorithm employed to learn this representation is byte-pair encoding (BPE); GPT-4o’s tokenizer uses a byte-level variant of it. We’ll explain BPE in the next section.

Tokenizer training

To train a good tokenizer, you need a massive corpus of text to train on. Running BPE on this corpus works as follows:

- Split all the text in the corpus into individual characters. Set these as the starting tokens in the vocabulary.

- Merge the two most frequently adjacent tokens from the text into one new token and add it to the vocabulary (without deleting the old tokens; this is important).

- Repeat this process until there are no remaining frequently occurring pairs of adjacent tokens, or the maximum vocabulary size has been reached.

As an example, assume that our entire training corpus consists of the text “abc abcd”:

- The text would be split into [“a”, “b”, “c”, “ ”, “a”, “b”, “c”, “d”]. Note that the fourth entry in that list is a space character. Our vocabulary would then be [“a”, “b”, “c”, “ ”, “d”].

- “a” and “b” most frequently occur next to each other in the text (tied with “b” and “c”, but “a” and “b” win alphabetically). So, we combine them into one token, “ab”. The vocabulary now looks like [“a”, “b”, “c”, “ ”, “d”, “ab”], and the updated text (with the “ab” token merge applied) looks like [“ab”, “c”, “ ”, “ab”, “c”, “d”].

- Now, “ab” and “c” occur most frequently together in the text. We merge them into the token “abc”. The vocabulary then looks like [“a”, “b”, “c”, “ ”, “d”, “ab”, “abc”], and the updated text looks like [“abc”, “ ”, “abc”, “d”].

- We end the process here since each adjacent token pair now only occurs once. Merging tokens further would make the resulting model perform worse on other texts. In practice, the vocabulary size limit is the limiting factor.
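The steps above can be sketched in a few lines of Python. This is a toy version that works on characters and stops once no pair occurs at least twice. The function names and the `max_vocab_size` default are our own. Production tokenizers add details this sketch leaves out.

```python
from collections import Counter


def apply_merge(tokens, pair):
    """Replace every adjacent occurrence of `pair` with one merged token."""
    merged = []
    i = 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def train_bpe(corpus, max_vocab_size=100):
    tokens = list(corpus)
    # Starting vocabulary: unique characters in order of first appearance.
    vocab = list(dict.fromkeys(tokens))
    merges = []
    while len(vocab) < max_vocab_size:
        pair_counts = Counter(zip(tokens, tokens[1:]))
        if not pair_counts:
            break
        # Most frequent pair first; ties are broken alphabetically.
        best_pair, best_count = min(
            pair_counts.items(), key=lambda item: (-item[1], item[0])
        )
        if best_count < 2:
            break  # every remaining pair occurs only once
        merges.append(best_pair)
        vocab.append(best_pair[0] + best_pair[1])  # old tokens are kept
        tokens = apply_merge(tokens, best_pair)
    return vocab, merges, tokens


vocab, merges, tokens = train_bpe("abc abcd")
print(vocab)   # ['a', 'b', 'c', ' ', 'd', 'ab', 'abc']
print(merges)  # [('a', 'b'), ('ab', 'c')]
print(tokens)  # ['abc', ' ', 'abc', 'd']
```

The ordered `merges` list is the part of the result that gets reused when tokenizing new text.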

With our new vocabulary set, we can map between text and tokens. Even text that we haven’t seen before, like “cab,” can be tokenized because we didn’t discard the single-character tokens. We can also return token numbers by simply looking up the position of the token within the vocabulary.

Good tokenizer training requires extremely high volumes of data and a lot of computing, more than most companies can afford. Companies get around this by skipping the training of their own tokenizer. Instead, they just use a pre-trained tokenizer (such as the GPT-4o tokenizer mentioned above) to save time and money with minimal, if any, loss in model performance.

Using the tokenizer

So, we have this subword tokenizer trained on a massive corpus using BPE. Now, how do we use it on a new piece of text?

We apply the merge rules we determined in the tokenizer training process. We first split the input text into characters. Then, we do token merges in the same order as in training.

To illustrate, we’ll use a slightly different input text of “dc abc”:

- We split it into characters [“d”, “c”, “ ”, “a”, “b”, “c”].

- The first merge we did in training was “ab” so we do that here: [“d”, “c”, “ ”, “ab”, “c”].

- The second merge we did was “abc” so we do that: [“d”, “c”, “ ”, “abc”].

- These are the only merge rules we have, so we’re done tokenizing, and we can return the token IDs.
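Here is a sketch of those steps using the vocabulary and merge rules from the “abc abcd” training example. It assumes token IDs are zero-based positions in the vocabulary list, and the function name `encode` is our own.

```python
# Vocabulary and merge rules from the "abc abcd" training example.
VOCAB = ["a", "b", "c", " ", "d", "ab", "abc"]
MERGES = [("a", "b"), ("ab", "c")]


def encode(text):
    """Split into characters, replay the merges in order, look up IDs."""
    tokens = list(text)
    for pair in MERGES:
        merged = []
        i = 0
        while i < len(tokens):
            if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
                merged.append(tokens[i] + tokens[i + 1])
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    # Assumption: a token's ID is its zero-based position in VOCAB.
    return tokens, [VOCAB.index(token) for token in tokens]


print(encode("dc abc"))  # (['d', 'c', ' ', 'abc'], [4, 2, 3, 6])
print(encode("cab"))     # (['c', 'ab'], [2, 5])
```

The second call shows that “cab,” which never appeared in training, still tokenizes cleanly.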

If we have a bunch of token IDs and we want to convert them into text, we can simply look up each token ID in the list and return its associated text. LLMs do this to turn the embeddings (vectors of numbers that capture the meaning of tokens by looking at the surrounding tokens) they work with back into human-readable text.
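Going from IDs back to text is a lookup followed by a join. The sketch below reuses the vocabulary from the “abc abcd” example and again assumes that IDs are zero-based positions in that list.

```python
# Vocabulary from the "abc abcd" training example.
VOCAB = ["a", "b", "c", " ", "d", "ab", "abc"]


def decode(token_ids):
    """Look up the text of each token ID and concatenate the pieces."""
    return "".join(VOCAB[token_id] for token_id in token_ids)


print(decode([4, 2, 3, 6]))  # dc abc
```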

Tokenization applications

Tokenization in LLMs

Tokenization in search engines

Tokenization in machine translation

Machine translation tokenization is interesting since the input and output languages are different. As a result, there will be two tokenizers, one for each language. Subword tokenization usually works best since it balances the trade-off between model efficiency and model performance. But some languages, such as Chinese, don’t have a linguistic component smaller than a word. There, word tokenization is called for.

Advantages of tokenization

Tokenization lets models work with text

Tokenization generalizes to new and rare text

Or more accurately, good tokenization generalizes to new and rare text. With subword and character tokenization, new texts can be decomposed into sequences of existing tokens. So, pasting an article with gibberish words into ChatGPT won’t cause it to break (though it may not give a very coherent response either). Good generalization also allows models to learn relationships among rare words, based on the relationships in the subtokens.